I'm starting a new regular monthly post series: TIL ${MONTH}. Although I've mostly disappeared from social networks, I still have a strong urge to share the curious discoveries I make throughout the month.

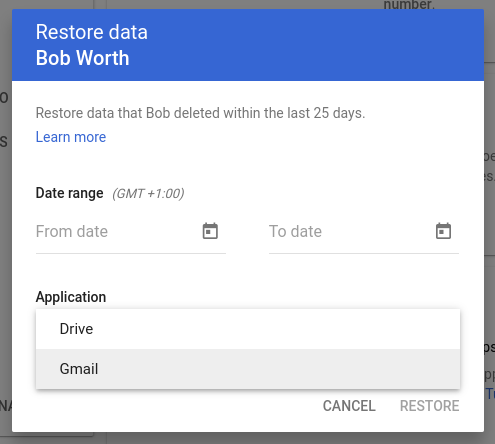

A Google Workspace admin can restore data removed by a user

It was switched on by default. I guess it isn't very surprising that an admin has this level of intervention, but still, huh.

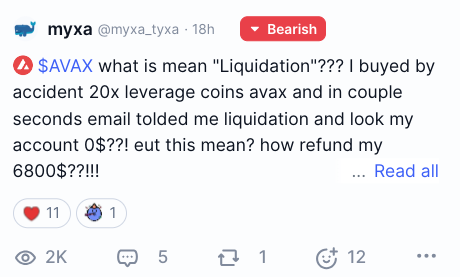

Great quotes from the wanna-be-crypto degens

(a post from CoinMarketCap)

It must be mistake, DeFi LLC return my lost funds!

Experiments with Stable Diffusion, Stable Video Diffusion and video interpolation models

I've been experimenting with these 3 models for a potential project idea. I'm still amazed every time these drawing models produce results of such quality: unbelievable results in seconds, even on my very basic hardware.

Example pipeline:

- (seed prompt) In ChatGPT4 I asked for a number of verbose, descriptive prompts for Stable Diffusion. The result was meh: it doesn't know what prompt format is expected, maybe intentionally.

- (source image) Produce a still image from the prompt using a Stable Diffusion-based model.

theme: creepy cities and towns without people

prompt: Deserted medieval town under a full moon, cobblestone streets empty and buildings dark. A light fog rolls through, and the shadows of absent inhabitants seem to flicker.

- (intermediate result) Produce 25 frames of 'video'. It's more like a slide show, since the stable-video-diffusion-img2vid-xt-1-1 model can only produce that many frames. That could be a 1-second video at 25 fps or a 5-second video at 5 fps. Here is an example at 6 fps:

- (final result) Fill in the missing frames (30 - 6 = 24 per second) by interpolating between the two closest frames using the AMT model. That produced a pretty good result: you can't spot the transitions between frames, and there are no visible artefacts at all.
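The frame arithmetic in the last two steps is easy to get wrong, so here is a minimal sketch of just the bookkeeping. It is not AMT itself (AMT is a learned model that predicts the in-between frames). The function names `clip_duration` and `interpolation_plan` are made up for illustration, and the sketch assumes the target frame rate is a whole multiple of the source frame rate, as with 6 fps to 30 fps.

```python
def clip_duration(num_frames: int, fps: int) -> float:
    """Length of a clip in seconds for a fixed number of frames."""
    return num_frames / fps


def interpolation_plan(src_fps: int, dst_fps: int, num_src_frames: int):
    """List (left_frame, right_frame, t) for every output frame.

    t is the position between the two neighbouring source frames:
    t == 0.0 means "reuse the source frame", anything else is a frame
    the interpolation model has to synthesise.
    """
    if dst_fps % src_fps != 0:
        raise ValueError("this sketch assumes dst_fps is a multiple of src_fps")
    factor = dst_fps // src_fps  # 30 // 6 == 5 output frames per source frame
    plan = []
    for i in range(num_src_frames - 1):
        for k in range(factor):
            plan.append((i, i + 1, k / factor))
    # The last source frame has no right neighbour, so it is reused as-is.
    plan.append((num_src_frames - 1, num_src_frames - 1, 0.0))
    return plan


plan = interpolation_plan(src_fps=6, dst_fps=30, num_src_frames=25)
new_frames = sum(1 for _, _, t in plan if t != 0.0)
print(clip_duration(25, 25), clip_duration(25, 5))  # 1.0 5.0
print(len(plan), new_frames)  # 121 96
```

For 6 fps to 30 fps that is 4 synthesised frames between every pair of neighbours, which matches the 24 missing frames per second of video.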

That was a fun experiment. It took me about 4 hours to build the pipeline in a notebook, even without prior experience working with Hugging Face tools directly in Python.
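For the curious, the first two model steps could look roughly like this with the Hugging Face `diffusers` library. This is a sketch under assumptions, not the notebook itself: the function name `generate_clip`, the fp16 weights, the CUDA device, the 1024x576 image size and the `decode_chunk_size` value are all my guesses, and the Stable Diffusion checkpoint is left as a parameter on purpose. The SVD weights may also require accepting the model licence on the Hub first.

```python
SVD_MODEL_ID = "stabilityai/stable-video-diffusion-img2vid-xt-1-1"


def generate_clip(prompt, sd_model_id, out_path="clip.mp4",
                  fps=6, num_frames=25, seed=0, device="cuda"):
    """Prompt -> still image -> short clip. Returns the output path."""
    # Heavy imports live inside the function, so defining it costs nothing.
    import torch
    from diffusers import StableDiffusionPipeline, StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video

    # Step 1: still image from the text prompt.
    # sd_model_id is whichever Stable Diffusion-based checkpoint you use.
    sd = StableDiffusionPipeline.from_pretrained(
        sd_model_id, torch_dtype=torch.float16
    ).to(device)
    # Assumption: render directly at 1024x576, the resolution SVD works with.
    image = sd(prompt, height=576, width=1024).images[0]

    # Step 2: animate the still image into num_frames frames.
    # Assumption: the repository ships an fp16 variant of the weights.
    svd = StableVideoDiffusionPipeline.from_pretrained(
        SVD_MODEL_ID, torch_dtype=torch.float16, variant="fp16"
    ).to(device)
    frames = svd(
        image,
        num_frames=num_frames,
        decode_chunk_size=8,  # smaller chunks trade speed for less memory
        generator=torch.manual_seed(seed),
    ).frames[0]

    # Write the frames out as a low frame rate 'slide show' video.
    export_to_video(frames, out_path, fps=fps)
    return out_path
```

Calling `generate_clip(prompt, sd_model_id="<your checkpoint>")` would give the low-fps clip that then goes into the interpolation step.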