Replit is great

Despite them being jerks about incorrectly issued invoices, the platform is just amazing. It's never been this easy for the average Joe to build something custom from scratch.

The experience is the complete opposite of what you read in posts from stubborn people who probably assume the peak of AI these days is the free version of GitHub Copilot, and who likely haven't put any effort into learning how to prompt or provide context. These posts often have a large, like-minded following of very important developers looking for confirmation that their jobs aren't going away, so they can keep writing their sacred unit tests chasing 99% code coverage while presenting “breakthrough” results at yet another conference.

I kinda like that AI is actively putting snob developers in their place, shifting focus to people who actually get shit done.

My contractor Claude says...

Great! We've made significant progress. The build time improved from 2.5 minutes to about 7.5 minutes, but we still have some issues to fix. Let me address the remaining problems.

More is better, isn’t it?

Local agentic LLMs suck

In one experiment, I tried open-source/open-weights models to see whether they could at least match Sonnet 3.5.

Given:

- GPU: 8×4090

- Roo Code

- "top" models, both with 128k context:
  - Qwen2.5-Coder-32B-Instruct-Q6_K (60GB VRAM)
  - DeepSeek-R1-Distill-Llama-70B-Q5_K_S.gguf (90GB VRAM)

- task type: expose some blockchain data via an API
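Where do figures like 60GB and 90GB come from? Roughly: quantized weights plus the KV cache for the full 128k context. The sketch below is a back-of-the-envelope estimate under stated assumptions, not a measurement: architecture values taken from the public model configs (64 layers for the Qwen 32B model, 80 for the Llama 70B distill, both with 8 KV heads of dimension 128), approximate effective bits per weight for the llama.cpp quants (6.5625 for Q6_K, about 5.5 for Q5_K_S), and an fp16 KV cache. Compute buffers and runtime overhead are ignored.

```python
# Back-of-the-envelope VRAM estimate: quantized weights + KV cache.
# Architecture numbers and bits-per-weight are ASSUMPTIONS taken from public
# model configs and llama.cpp quant descriptions, not measured values.
from dataclasses import dataclass

GB = 1e9  # decimal gigabytes


@dataclass
class ModelSpec:
    name: str
    params: float           # total parameter count
    bits_per_weight: float  # approximate effective bits per weight of the quant
    layers: int             # number of transformer layers
    kv_heads: int           # key/value heads (grouped-query attention)
    head_dim: int           # dimension of each attention head


def weights_bytes(m: ModelSpec) -> float:
    """Size of the quantized weights: params * bits / 8."""
    return m.params * m.bits_per_weight / 8


def kv_cache_bytes(m: ModelSpec, context: int, bytes_per_elem: int = 2) -> int:
    """K and V tensors for every layer and every token (fp16 = 2 bytes by default)."""
    per_token = 2 * m.layers * m.kv_heads * m.head_dim * bytes_per_elem
    return per_token * context


def estimate_gb(m: ModelSpec, context: int) -> float:
    # Ignores compute buffers and runtime overhead, so real usage is somewhat higher.
    return (weights_bytes(m) + kv_cache_bytes(m, context)) / GB


CONTEXT = 128 * 1024  # "128k" context

qwen = ModelSpec("Qwen2.5-Coder-32B Q6_K", 32.5e9, 6.5625, 64, 8, 128)
llama = ModelSpec("DeepSeek-R1-Distill-Llama-70B Q5_K_S", 70.6e9, 5.5, 80, 8, 128)

for m in (qwen, llama):
    print(f"{m.name}: ~{estimate_gb(m, CONTEXT):.0f} GB")
```

Under these assumptions it prints about 61 GB and 91 GB, in the same ballpark as the figures above; for the Qwen model the 128k KV cache alone is more than half of the total. Both fit within the 192 GB of eight 24 GB cards.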

TLDR: laughable output, wasted time and money.

I knew not to expect much, so I prompted Roo to implement a very specific, tiny feature: add an endpoint alongside an existing one (essentially implementing by example; I also added the Context7 MCP server for documentation, so there was no guessing game involved):

- Qwen2.5-Coder-32B-Instruct-Q6_K: kind of gave up, started messing with the code, and then Roo complained that the output was malformed. The good thing? It only wasted 30 minutes.

- DeepSeek-R1-Distill-Llama-70B-Q5_K_S.gguf: completed the feature, and it actually worked, although part of the prompt was ignored. But hey, it took just under an hour of 8×4090 to almost complete a task that even Grok3-mini (free via Cursor) would do in a split second.

And the code it produced was hilarious:

```python
try:
    subnets_info = app_state.subtensor.get_all_subnets_info()
    import dataclasses  # import(1)
    subnets_data = [dataclasses.asdict(subnet_info) for subnet_info in subnets_info]
    import dataclasses  # import(2) just in case
    import json
    from dataclasses import asdict  # import(3) why not?

    def serialize_balance(obj):  # unused implementation #1
        if isinstance(obj, bt.Balance):
            return float(obj)
        raise TypeError(f"Object of type {obj.__class__.__name__} is not JSON serializable")

    import dataclasses  # import(4) more is better, right?
    from dataclasses import asdict  # import(5) nobody dies if we add one more import just to be sure?

    def serialize_balance(obj):  # unused implementation #2: even if it's not in use, so what?
        if isinstance(obj, bt.Balance):
            return float(obj)
        raise TypeError(f"Object of type {obj.__class__.__name__} is not JSON serializable")

    from fastapi.encoders import jsonable_encoder
    subnet_dicts = [subnet_info.__dict__ for subnet_info in subnets_info]
    subnet_dicts = [{k: str(v) for k, v in d.items()} for d in subnet_dicts]
    return JSONResponse(content=jsonable_encoder(subnet_dicts))  # return here
    return JSONResponse(content=subnets_data)  # but if somehow return above didn't work (I don't trust Python either), here's a backup return
except HTTPException as e:
    ...
```

Clearly, the wet dreams about autonomous open-weight AI agents that can be left alone for a day to iteratively brute-force a solution still belong in utopia. This wasn't the only thing I attempted: I spent about four days trying to streamline my work with these models, but frankly, unless you're an enthusiast, it's just a waste of time and money compared to top models (Gemini 3, Claude 3.8+, GPT-4.1, and especially o3).
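For contrast, the whole mess above boils down to one import, one converter and one return. This is a minimal sketch, not the original project code: it assumes the subnet objects are dataclasses (the generated code calls `dataclasses.asdict` on them) holding `bt.Balance`-like values, and `Balance`/`SubnetInfo` are hypothetical stand-ins so it runs without bittensor or FastAPI. In the real endpoint, the resulting list would go into a single `JSONResponse`.

```python
# Sketch of the serialization the endpoint actually needed.
# `Balance` and `SubnetInfo` are HYPOTHETICAL stand-ins for the real bittensor
# types; field names are illustrative only.
import json
from dataclasses import asdict, dataclass


class Balance:
    """Hypothetical stand-in for bt.Balance: wraps an amount, converts to float."""

    def __init__(self, amount: float) -> None:
        self.amount = amount

    def __float__(self) -> float:
        return float(self.amount)


@dataclass
class SubnetInfo:
    """Hypothetical subnet record."""
    netuid: int
    name: str
    burn: Balance


def to_jsonable(obj):
    """Fallback for json.dumps: turn Balance into a float, reject anything else."""
    if isinstance(obj, Balance):
        return float(obj)
    raise TypeError(f"Object of type {obj.__class__.__name__} is not JSON serializable")


def serialize_subnets(subnets: list[SubnetInfo]) -> str:
    # asdict() recurses into dataclasses but leaves other objects (like Balance)
    # as copies of themselves, so json.dumps hands those to the `default` hook.
    return json.dumps([asdict(s) for s in subnets], default=to_jsonable)


print(serialize_subnets([SubnetInfo(1, "alpha", Balance(0.5))]))
```

Repeating `import dataclasses` five times is harmless, by the way: after the first import, Python just looks the module up in `sys.modules`. It's only noise, much like the unreachable backup `return`.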

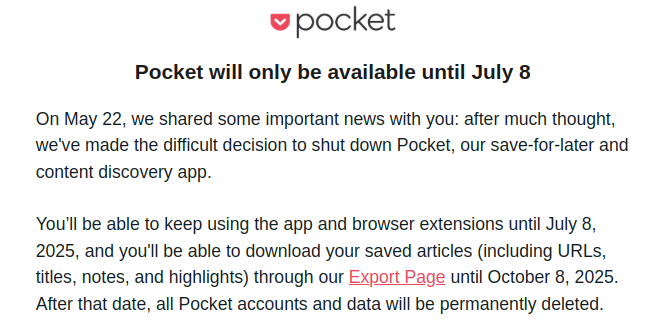

~~Google Reader~~ Pocket is being shut down

Ironically, the very same Pocket is now being shut down. When Google Reader was discontinued, there was a big panic in my bubble: back in 2010–2012, RSS was a huge part of life, along with personal blogs (for zoomers: these were blogs running on your own domain, not on social media). At the time, the news was shocking, but hey, Google has always loved the “kill it” button.

Pocket took over the role of RSS (well, to some extent).

You know the saying, "Pocket is a safe storage for articles you’ll never read"? It definitely holds true for me.

Either way, time to say farewell to Pocket.